Observability with Langfuse

This tutorial covers how to integrate goose with Langfuse to monitor your goose requests and understand how the agent is performing.

What is Langfuse

Langfuse is an open-source LLM engineering platform that enables teams to collaboratively monitor, evaluate, and debug their LLM applications.

Set up Langfuse

Sign up for Langfuse Cloud or self-host Langfuse with Docker Compose to get your Langfuse API keys.

Configure goose to Connect to Langfuse

Set the following environment variables so that goose (written in Rust) can connect to the Langfuse server:

export LANGFUSE_INIT_PROJECT_PUBLIC_KEY=pk-lf-...
export LANGFUSE_INIT_PROJECT_SECRET_KEY=sk-lf-...
export LANGFUSE_URL=https://cloud.langfuse.com # EU data region 🇪🇺
# https://us.cloud.langfuse.com if you're using the US region 🇺🇸
# https://localhost:3000 if you're self-hosting
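Before starting goose, it can help to confirm that all three variables are set in the current shell. The following is a minimal sketch, not part of goose or Langfuse: `check_langfuse_env` is a hypothetical helper name, and it only checks that each variable is non-empty, not that the keys are valid.

```shell
# Hypothetical helper (not provided by goose or Langfuse):
# report every Langfuse variable that is unset or empty.
check_langfuse_env() {
  missing=0
  for name in LANGFUSE_INIT_PROJECT_PUBLIC_KEY \
              LANGFUSE_INIT_PROJECT_SECRET_KEY \
              LANGFUSE_URL; do
    # Indirect lookup via eval keeps this portable across POSIX shells.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  # Exit status 0 when everything is set, 1 otherwise.
  return "$missing"
}
```

Calling `check_langfuse_env && echo "Langfuse variables are set"` in the same shell where you ran the `export` commands prints the confirmation only when none of the three variables is missing.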

Run goose with Langfuse Integration

Now run goose as usual. With the environment variables set, Langfuse starts capturing traces of your goose activity, so you can monitor the agent's AI requests and actions in Langfuse.

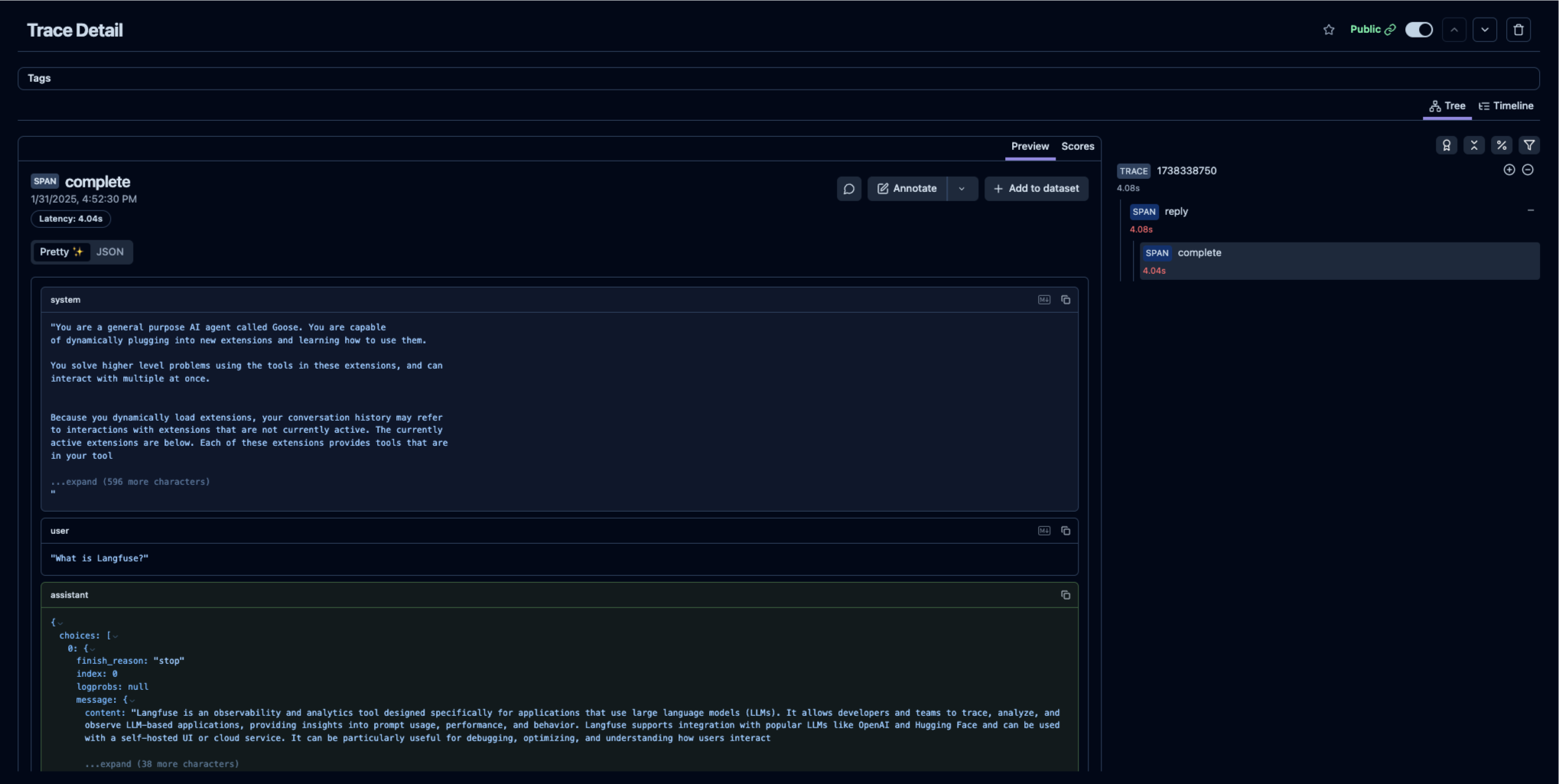

Example trace (public) in Langfuse
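If no traces appear, one thing worth ruling out is that the server in `LANGFUSE_URL` is unreachable from your machine. The sketch below builds a health-check URL from that variable. It assumes your Langfuse deployment exposes the public health endpoint at `/api/public/health`, and `langfuse_health_url` is a hypothetical helper name. A successful response only shows that the server is up; it does not confirm that goose is sending traces or that your keys are valid.

```shell
# Hypothetical helper: build the health-check URL from LANGFUSE_URL
# (or from an explicit first argument), tolerating a trailing slash.
# Assumes the server exposes /api/public/health.
langfuse_health_url() {
  base="${1:-${LANGFUSE_URL:-}}"
  printf '%s/api/public/health\n' "${base%/}"
}

# Usage (requires network access and curl):
#   curl -fsS "$(langfuse_health_url)"
```

With `-f`, curl exits with a non-zero status if the server responds with an HTTP error, which makes the command easy to use in a script.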