MCP UI: Bringing the Browser into the Agent

Goose recently released support for MCP-UI, which allows MCP servers to suggest and contribute user interface elements back to the agent.

MCP-UI is still an open RFC being considered for adoption into the MCP spec. It works as is but may change as the proposal advances.

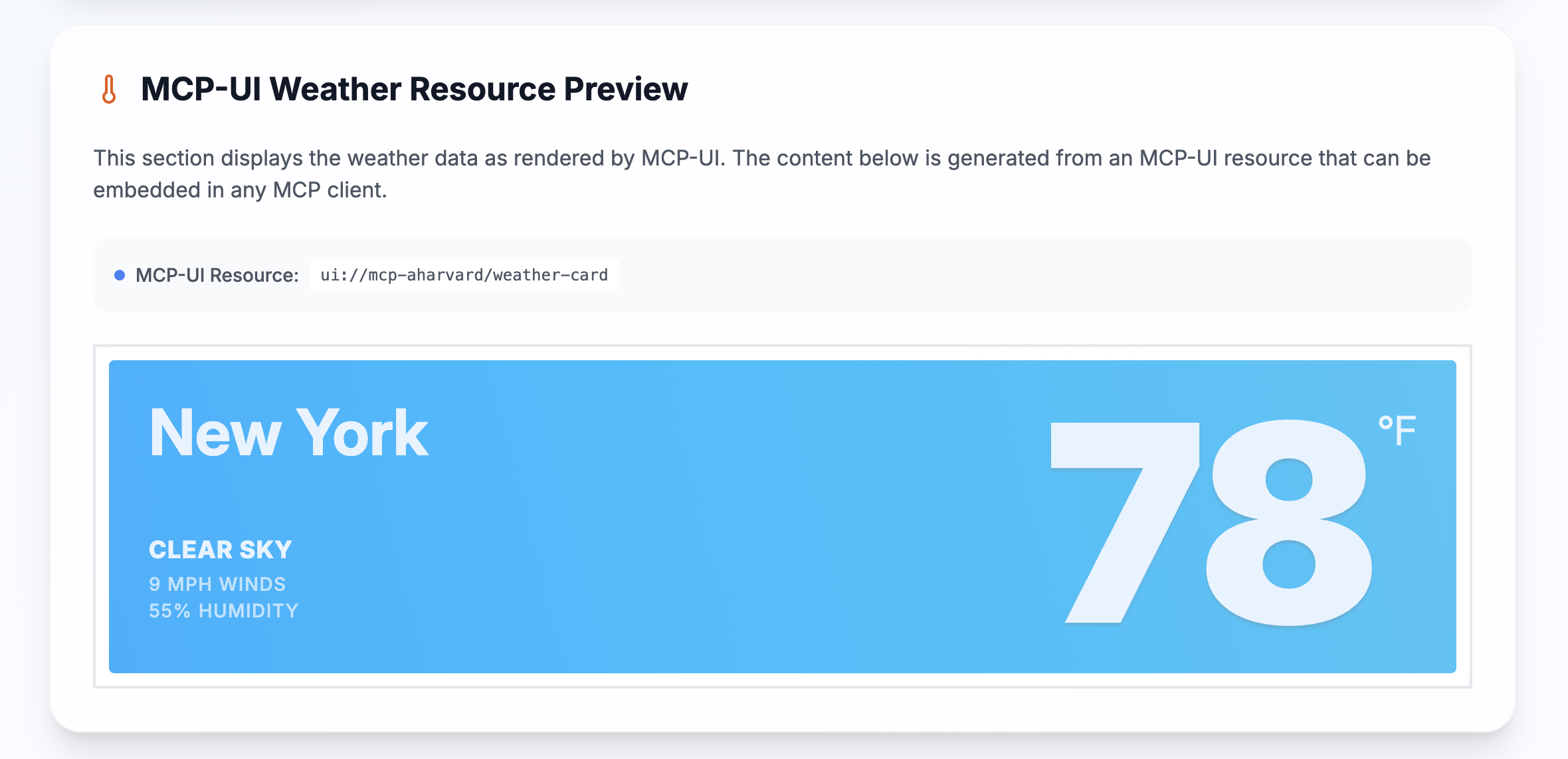

MCP-UI sits on top of the MCP protocol: instead of returning only text or markdown, servers can return content that the client can render richly, including interactive GUI content.

Many everyday activities that agents undertake could benefit from a graphical representation. Sometimes the agent renders a GUI on its own (I know I do that a lot), but MCP-UI makes this intrinsic to extensions, for cases where interaction with a human is best done graphically. It also works naturally with commerce applications, where you want to see the product (hence the Shopify connection)!

It is worth taking a minute to watch the demo of an MCP server for an airline seat selector to get a sense of the capability.

Essentially, MCP servers are suggesting GUI elements for the client (agent) to render as it sees fit.

How do I use this?

Starting from Goose v1.3.0, you can add MCP-UI as an extension.

- goose Desktop

- goose CLI

Use goose configure to add an extension of type Remote Extension (Streaming HTTP) with:

Endpoint URL

https://mcp-aharvard.netlify.app/mcp

Take a look at the MCP-UI demos provided by Andrew Harvard. You can also check out his GitHub repo, which has samples you can start with.

The tech behind MCP-UI

At the heart of MCP-UI is an interface for a UIResource:

    interface UIResource {
      type: 'resource';
      resource: {
        uri: string; // e.g., ui://component/id
        // text/html for HTML content, text/uri-list for URL content,
        // application/vnd.mcp-ui.remote-dom for remote-dom content (JavaScript)
        mimeType: 'text/html' | 'text/uri-list' | 'application/vnd.mcp-ui.remote-dom';
        text?: string; // Inline HTML, external URL, or remote-dom script
        blob?: string; // Base64-encoded HTML, URL, or remote-dom script
      };
    }

The mimeType is where the action happens: it tells the client how to interpret the payload. In the simplest case it is text/html, and the text field carries inline HTML.
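To make the simplest case concrete, here is a minimal sketch of building a text/html UIResource and reading its payload back. The interface mirrors the one above; the helper names htmlResource and payloadOf are hypothetical and are not part of any MCP-UI SDK, and the base64 decoding assumes a runtime that provides atob and TextDecoder (browsers and recent Node versions do).

```typescript
// Shape mirrors the UIResource interface shown in this post.
type UIMimeType =
  | 'text/html'
  | 'text/uri-list'
  | 'application/vnd.mcp-ui.remote-dom';

interface UIResource {
  type: 'resource';
  resource: {
    uri: string;
    mimeType: UIMimeType;
    text?: string;
    blob?: string;
  };
}

// Hypothetical helper: wrap inline HTML in a UIResource.
function htmlResource(uri: string, html: string): UIResource {
  return { type: 'resource', resource: { uri, mimeType: 'text/html', text: html } };
}

// Hypothetical helper: return the payload whether it arrived as plain text
// or as a base64-encoded blob (decoded here as UTF-8).
function payloadOf(res: UIResource): string {
  const { text, blob } = res.resource;
  if (text !== undefined) return text;
  if (blob !== undefined) {
    const bytes = Uint8Array.from(atob(blob), (c) => c.charCodeAt(0));
    return new TextDecoder().decode(bytes);
  }
  throw new Error('UIResource has neither text nor blob');
}

// Usage: the URI is an illustrative value following the ui:// example above.
const seatPicker = htmlResource('ui://demo/seat-picker', '<button>Seat 12A</button>');
console.log(payloadOf(seatPicker));
```

A server would include an object like seatPicker in its tool result; how the client displays it is left to the client.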

Another key technology at play here is Remote DOM, an open source project from Shopify. It lets you take DOM elements from a sandboxed environment and render them in another one, which is quite useful for agents. This also opens up the possibility that the agent side can render widgets as it sees fit (for example, with locally matching styles or design language).
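As a rough illustration of how a client might branch on the three MIME types, the sketch below maps a UIResource to a description of what to render. RenderPlan and planRender are hypothetical names invented for this example, not MCP-UI or Remote DOM APIs, and the sketch only looks at the text field (a fuller client would also handle blob). For text/uri-list it takes the first non-comment line, following the uri-list convention that lines starting with # are comments.

```typescript
type UIMimeType =
  | 'text/html'
  | 'text/uri-list'
  | 'application/vnd.mcp-ui.remote-dom';

interface UIResource {
  type: 'resource';
  resource: { uri: string; mimeType: UIMimeType; text?: string; blob?: string };
}

// Hypothetical: what the client has decided to do with a resource.
type RenderPlan =
  | { kind: 'inline-html'; html: string }     // e.g. render in a sandboxed frame
  | { kind: 'external-url'; url: string }     // e.g. load the URL in a frame
  | { kind: 'remote-dom'; script: string };   // e.g. run in a sandbox, render natively

function planRender(res: UIResource): RenderPlan {
  const body = res.resource.text ?? '';
  switch (res.resource.mimeType) {
    case 'text/html':
      return { kind: 'inline-html', html: body };
    case 'text/uri-list': {
      // One URI per line; skip blank lines and '#' comments, use the first URI.
      const url = body
        .split(/\r?\n/)
        .map((line) => line.trim())
        .find((line) => line !== '' && !line.startsWith('#'));
      if (!url) throw new Error('uri-list contained no URL');
      return { kind: 'external-url', url };
    }
    case 'application/vnd.mcp-ui.remote-dom':
      return { kind: 'remote-dom', script: body };
  }
}
```

Keeping the decision separate from the actual rendering is one way to let each client (desktop, mobile, or terminal) choose its own presentation for the same resource.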

Possible futures

It's still early days for MCP-UI, so the details may change, but that is part of what makes experimenting with it exciting right now.

MCP-UI will continue to evolve, and may pick up more declarative ways for servers to say that they need forms or widgets of certain types without specifying the exact rendering. How nice would it be to specify these components and let the agent render them beautifully, be that in a desktop or mobile client, or even a text UI in a command line!